Fred Huffman Peels Back the Layers of OSI

We can say unequivocally that if you ever find yourself stuck between OSI Layer 2 and Layer 3, it’s good to have a guy like Fred along. He has a background in TV studio and transmission engineering starting with the RCA Broadcast Division and has also worked in telecommunications as a network systems engineer for MCI International. Fred continues to stay active in the industry as a respected broadcast engineering consultant.

WS: We talked before about how IP audio networking is fairly well established in the broadcast studio and overall. What is needed for video IP to reach that same kind of adoption?

FH: I believe something akin to AES67 is needed for video. (AES67-2013 is a standard for interoperability between high-performance audio-over-IP devices. It uses IEEE 1588-2008, the Precision Time Protocol version 2 (PTPv2), as its master clock reference.) For Ethernet to be able to transport SDI, or anything else that requires continuity, its performance has to be made deterministic. The broadcast industry is going in the right direction. Sony and Cisco, who are both respected engineering companies, are said to prefer SMPTE ST 2059, which also relies on PTP. But that’s almost beside the point, because the network has to be capable of transporting real-time content, and the network itself runs on a completely separate, independent time base.
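
For readers curious how PTP lets a receiving device line its clock up with a master, here is a minimal sketch of the basic IEEE 1588 offset and delay calculation. It takes the four timestamps from one Sync/Delay_Req exchange and assumes the network path is symmetric (same delay in each direction). The class and function names are hypothetical, and real PTP implementations add filtering and a clock servo on top of this arithmetic.

```python
from dataclasses import dataclass


@dataclass
class SyncExchange:
    """Timestamps (nanoseconds) from one PTP Sync / Delay_Req exchange."""
    t1: int  # master sends Sync (read on the master clock)
    t2: int  # slave receives Sync (read on the slave clock)
    t3: int  # slave sends Delay_Req (read on the slave clock)
    t4: int  # master receives Delay_Req (read on the master clock)


def offset_and_delay(ex: SyncExchange) -> tuple[float, float]:
    """Return (slave offset from master, mean one-way path delay).

    Assumes the path delay is the same in both directions.
    """
    master_to_slave = ex.t2 - ex.t1  # path delay + offset
    slave_to_master = ex.t4 - ex.t3  # path delay - offset
    offset = (master_to_slave - slave_to_master) / 2
    delay = (master_to_slave + slave_to_master) / 2
    return offset, delay


# Slave clock 5 us ahead of the master, 100 us one-way delay:
offset, delay = offset_and_delay(SyncExchange(t1=0, t2=105_000, t3=200_000, t4=295_000))
```

The slave then subtracts the computed offset from its own clock; repeating the exchange keeps every device on the network referenced to the same master time base.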

WS: You briefly mentioned before that broadcast operations and IT have essentially converged, but that it’s not often apparent because we don’t always share the same terms. Explain what you mean.

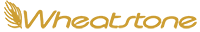

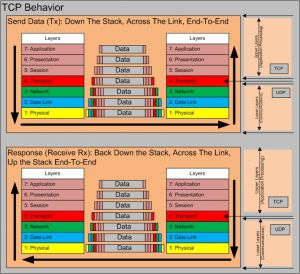

FH: Maybe the best example, one of many, is the term file-based workflow. What we’re really talking about are file transfers that use FTP. That’s important to know because in broadcasting we have two kinds of content transport: real-time streams, whether prerecorded or live, that rely on UDP, and non-real-time file transfers that rely on TCP. That gets us into Layer 4 of the OSI stack, the transport layer, where these two protocols function. It is also something of a dividing line between the upper stack, where application processing occurs, and the lower stack, which deals with communications link processing.
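
To make the UDP/TCP distinction concrete, here is a minimal loopback sketch using Python’s standard `socket` module. It only illustrates the API-level difference (UDP simply sends a datagram with no connection; TCP must establish a connection first and delivers an acknowledged byte stream); it is not a media transport, and the function names and payload are hypothetical.

```python
import socket

PAYLOAD = b"media packet 0001"  # hypothetical payload


def send_udp_datagram(payload: bytes) -> bytes:
    """UDP: no connection and no acknowledgement; the sender just transmits."""
    rx = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    tx = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        rx.bind(("127.0.0.1", 0))  # let the OS choose a free port
        rx.settimeout(2.0)
        tx.sendto(payload, rx.getsockname())  # fire and forget
        data, _ = rx.recvfrom(2048)
        return data
    finally:
        rx.close()
        tx.close()


def send_tcp_stream(payload: bytes) -> bytes:
    """TCP: a connection must exist before data flows; the OS acknowledges
    and retransmits segments behind the scenes."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    client = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        server.bind(("127.0.0.1", 0))
        server.listen(1)
        client.connect(server.getsockname())  # handshake completes via the listen backlog
        client.sendall(payload)
        client.shutdown(socket.SHUT_WR)  # mark the end of the byte stream
        conn, _ = server.accept()
        with conn:
            conn.settimeout(2.0)
            chunks = []
            while chunk := conn.recv(2048):
                chunks.append(chunk)
        return b"".join(chunks)
    finally:
        client.close()
        server.close()
```

On a lossy network the UDP datagram could simply vanish, while TCP would retransmit it at the cost of added, unpredictable delay; that trade-off is why live streams tend to ride on UDP and file transfers on TCP.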

WS: That is a good example. Both those transport protocols are useful for moving audio content from one place to another in the network.

FH: Right. Either we have content in a file on a file server that at some point is going to be played back in real time, or we have content originating live. Regardless, it has to be synchronous if you are going to mix it, perform special effects and so forth. That’s one time base. But the minute it gets on the network, the network has to be able to handle that live material; it has to temporarily store it and transfer it from its native time base into the network transport, which has its own unrelated time base. The transition from the audio/video system time base to the network time base is absolutely critical. At the other end of the communications link we have a second application processor. It takes a while to understand that, and it sometimes takes longer for IT and telecom people to understand it because they aren’t familiar with the basics of pixel and frame structure. They kind of get the audio part of it because they’re used to dealing with the digital nature of voice over IP or digital phone calls. But there has to be an understanding of how content moves up and down the (OSI) stack and across the network, end to end.
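
One common way to bridge the media time base and the network’s unrelated timing is a fixed-latency playout buffer at the receiver. The sketch below is a simplification under stated assumptions: each packet carries a capture timestamp from the source time base, the receiver’s clock is locked to the source (for example via PTP), and every packet is played out exactly `latency_us` after capture. The `Packet` fields and function name are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    seq: int
    media_time_us: int    # capture time on the source (media) time base
    arrival_time_us: int  # arrival time on the receiver clock, assumed locked to the source


def schedule_playout(packets: list[Packet], latency_us: int) -> tuple[list[tuple[int, int]], list[int]]:
    """Return ([(seq, playout_time_us), ...] in media order, [late seqs]).

    Network jitter is absorbed as long as a packet arrives before its
    playout deadline; anything later is reported as late.
    """
    on_time, late = [], []
    for p in sorted(packets, key=lambda p: p.media_time_us):
        deadline = p.media_time_us + latency_us
        if p.arrival_time_us <= deadline:
            on_time.append((p.seq, deadline))
        else:
            late.append(p.seq)
    return on_time, late


# Packets captured every 1 ms, arriving out of order with variable delay.
arrivals = [Packet(2, 2000, 2400), Packet(0, 0, 300), Packet(3, 3000, 4600), Packet(1, 1000, 1900)]
on_time, late = schedule_playout(arrivals, latency_us=1000)
```

Here packets 0 to 2 play out at evenly spaced 1 ms intervals regardless of when they arrived, while packet 3 misses its deadline. A larger latency would absorb more jitter at the cost of added delay.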

WS: Our readers might be sorry I asked, but how so?

FH: (Laughs.) I call it the quandary of bits, bytes and frames. If you start at the top of the OSI stack, you have bits being processed in groups called bytes. These entities move from one layer to another as they are stripped of their native synchronization bits/bytes and placed into packets. As you know, Layers 1 through 4 are typically referred to as lower stack operations and Layers 5 through 7 as upper stack. TCP is differentiated from UDP because it requires processing activity by two processors, one at each end of a communications link, working together. Thus the term interoperability. The point is, after all this processing of bits, resulting in bytes being placed into packets, you end up with Ethernet frames at Layer 2, which is the basic network transport frame structure.
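
The bytes-into-packets-into-frames progression can be sketched with Python’s `struct` module. This minimal example wraps a hypothetical zero-filled payload in a UDP header (Layer 4), an IPv4 header (Layer 3) and an Ethernet header (Layer 2). The ports, addresses and MAC values are illustrative assumptions; RTP and any media-specific headers are omitted, and the Ethernet frame check sequence is left off because NICs normally append it.

```python
import struct


def ipv4_checksum(header: bytes) -> int:
    """Ones'-complement sum of the header's 16-bit words (RFC 791)."""
    total = sum(struct.unpack(f"!{len(header) // 2}H", header))
    while total > 0xFFFF:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def udp_segment(payload: bytes, src_port: int, dst_port: int) -> bytes:
    # Layer 4: 8-byte UDP header; checksum 0 means "not computed" (permitted over IPv4)
    return struct.pack("!HHHH", src_port, dst_port, 8 + len(payload), 0) + payload


def ipv4_packet(segment: bytes, src: bytes, dst: bytes) -> bytes:
    # Layer 3: 20-byte IPv4 header, no options, TTL 64, protocol 17 = UDP
    hdr = struct.pack("!BBHHHBBH4s4s", 0x45, 0, 20 + len(segment), 0, 0, 64, 17, 0, src, dst)
    csum = ipv4_checksum(hdr)
    return hdr[:10] + struct.pack("!H", csum) + hdr[12:] + segment


def ethernet_frame(packet: bytes, dst_mac: bytes, src_mac: bytes) -> bytes:
    # Layer 2: 14-byte header with EtherType 0x0800 (IPv4); FCS omitted
    return dst_mac + src_mac + struct.pack("!H", 0x0800) + packet


payload = bytes(1376)  # hypothetical media payload
pkt = ipv4_packet(udp_segment(payload, 5004, 5004), bytes([192, 168, 1, 10]), bytes([239, 1, 1, 1]))
frame = ethernet_frame(pkt, bytes.fromhex("01005e010101"), bytes.fromhex("020000000001"))
```

Each layer only prepends its own header and treats everything inside as opaque bytes, which is why the receiving host can peel the layers off in reverse order on the way back up the stack.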

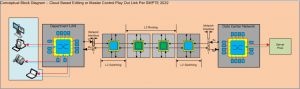

Take SMPTE ST 2022-6, which typically carries audio and video essence in a serial digital (SDI) structure. This structure is parsed into 1,376-byte payloads that are time-stamped and encapsulated into numbered media packets, sans synchronizing information. Each packet is then inserted into a Layer 2 Ethernet frame, which passes it to a Layer 3 router. That router may be only the first node in a multi-node network link ending at a Layer 2 switch, which connects to a network interface card (NIC). From there the content makes its way up the stack to the other host, where it is stored, processed, played out to a display or speaker, or used in a production.
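
As a rough worked example of that parsing step, the sketch below splits one frame of SDI data into numbered, time-stamped 1,376-byte payloads. It assumes 1080i HD-SDI at 29.97 frames per second, which uses 2,200 total samples per line, 1,125 total lines and 20 bits per multiplexed luma/chroma sample word, giving 6,187,500 bytes per frame. The tuple layout, the zero-padding of the last payload and the function names are hypothetical simplifications; the real ST 2022-6 RTP and payload headers are deliberately left out.

```python
PAYLOAD_BYTES = 1376  # SDI payload bytes per media packet


def sdi_bytes_per_frame(samples_per_line: int, lines_per_frame: int, bits_per_word: int) -> int:
    return samples_per_line * lines_per_frame * bits_per_word // 8


def packets_per_frame(frame_bytes: int) -> int:
    return (frame_bytes + PAYLOAD_BYTES - 1) // PAYLOAD_BYTES  # ceiling division


def packetize(sdi: bytes, first_seq: int, timestamp: int) -> list[tuple[int, int, bytes]]:
    """Split SDI bytes into (seq, timestamp, payload) tuples.

    Assumption for illustration: the final payload is zero-padded to full size.
    """
    packets = []
    for i in range(0, len(sdi), PAYLOAD_BYTES):
        chunk = sdi[i:i + PAYLOAD_BYTES].ljust(PAYLOAD_BYTES, b"\x00")
        seq = (first_seq + i // PAYLOAD_BYTES) & 0xFFFF  # 16-bit sequence number wraps around
        packets.append((seq, timestamp, chunk))
    return packets


frame_bytes = sdi_bytes_per_frame(2200, 1125, 20)  # one 1080i frame of HD-SDI
pkts = packetize(bytes(frame_bytes), first_seq=65530, timestamp=0)
```

That works out to 4,497 packets for every video frame, roughly 135,000 packets per second, each of which the network must deliver without loss or undue delay.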

So we have this bits, bytes, frames structure that moves (sends) data down the stack, across a network and back up the stack, end to end. It isn’t rocket science as some might claim, but it does require basic knowledge of how computer and network processing work. UDP is continuous and, unlike TCP, doesn’t have to stop and wait for a response or acknowledgement from the receiving end processor before sending the next window or group of bits/bytes/packets.
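
The cost of waiting for acknowledgements can be estimated with standard bandwidth-delay arithmetic. A window-limited sender such as TCP can have at most one window of unacknowledged data in flight per round trip, so its throughput is bounded by window / RTT, whereas a UDP sender is paced only by the media itself. The function names and example figures below are illustrative assumptions; 64 KiB is the largest TCP window without the window-scaling option.

```python
def max_windowed_throughput_bps(window_bytes: int, rtt_s: float) -> float:
    """Upper bound for a window-limited sender: one window per round trip."""
    return window_bytes * 8 / rtt_s


def required_window_bytes(rate_bps: float, rtt_s: float) -> float:
    """Bandwidth-delay product: bytes that must be in flight to sustain rate_bps."""
    return rate_bps * rtt_s / 8


# A 64 KiB window over a 20 ms round trip tops out around 26.2 Mb/s...
tcp_ceiling = max_windowed_throughput_bps(65_536, 0.020)
# ...while carrying 1.485 Gb/s HD-SDI over the same path needs ~3.7 MB in flight.
hd_window = required_window_bytes(1.485e9, 0.020)
```

Large windows can close that gap for file transfers, but any retransmission still stalls delivery for at least one round trip, which a real-time stream cannot afford.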

Back to the SDI over IP example. The process starts at the application layer, Layer 7, where the SDI signal is converted into media packets that are compatible with standard IP network transport packet structure. These are carried across the network and passed up the stack, where the receiving application converts them back to standard SDI video and AES audio essence and, if present, captioning, metadata or other related signals.
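
On the receiving side, the numbered media packets make it possible to restore order and spot losses before the application rebuilds the SDI signal. The sketch below is a minimal, hypothetical reassembly step: it reorders payloads by sequence number, zero-fills any gap so the output keeps its timing structure, and reports which packets were missing. It assumes sequence numbers don’t wrap within the window; production receivers may instead recover losses with forward error correction (SMPTE ST 2022-5) or redundant streams (SMPTE ST 2022-7).

```python
def reassemble(packets: list[tuple[int, bytes]], first_seq: int, count: int,
               payload_len: int) -> tuple[bytes, list[int]]:
    """Reorder (seq, payload) pairs and report gaps.

    Missing packets are replaced with zero-filled placeholders of payload_len
    bytes so that downstream byte offsets stay aligned.
    """
    by_seq = {seq: payload for seq, payload in packets}
    parts, missing = [], []
    for seq in range(first_seq, first_seq + count):
        if seq in by_seq:
            parts.append(by_seq[seq])
        else:
            missing.append(seq)
            parts.append(bytes(payload_len))  # concealment placeholder
    return b"".join(parts), missing


# Packets arrive out of order and sequence 11 was lost in the network.
data, missing = reassemble([(12, b"CC"), (10, b"AA"), (13, b"DD")], first_seq=10, count=4, payload_len=2)
```

Keeping lost packets as placeholders rather than dropping them preserves the position of every sample, so the rebuilt SDI stream stays correctly framed even when a packet is missing.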

One final point: standard IP packets carry 32-bit (IPv4) or 128-bit (IPv6) source and destination addresses and a few other overhead fields, but no synchronization information. It is up to the network to take standard IP-compliant packets and transport them to their destination without loss or undue delay. If you take a video file and you are going to play it back and mix in special effects or put titles over it in a production switcher, or mix the audio with another digitized audio signal, it all has to be synchronous. It has to be clocked so the network can make sense of it, or keep it straight, if you will. That’s where these other standards and protocols (Ethernet, IP) come in handy. And knowing about the OSI stack and how to use it as a tool can help us design and build IP-based systems as well as fix them when they break or fail to perform to a satisfactory level.
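
A quick way to see what an IP header does and does not contain is to parse one. This minimal sketch unpacks the fixed 20-byte IPv4 header with `struct` and converts the addresses with the standard `ipaddress` module: there are 32-bit source and destination addresses, a length, a TTL, a protocol number and a checksum, but no field that carries a media clock. The sample header values are hypothetical.

```python
import ipaddress
import struct

IPV4_FIELDS = ("version_ihl", "tos", "total_length", "identification",
               "flags_fragment", "ttl", "protocol", "checksum", "src", "dst")


def parse_ipv4_header(raw: bytes) -> dict:
    """Decode the fixed (option-free) portion of an IPv4 header."""
    hdr = dict(zip(IPV4_FIELDS, struct.unpack("!BBHHHBBH4s4s", raw[:20])))
    hdr["version"] = hdr["version_ihl"] >> 4
    hdr["header_bytes"] = (hdr["version_ihl"] & 0x0F) * 4  # IHL counts 32-bit words
    hdr["src"] = ipaddress.IPv4Address(hdr["src"])  # 4 bytes = 32-bit address
    hdr["dst"] = ipaddress.IPv4Address(hdr["dst"])
    return hdr


# Hypothetical header: 1,424-byte UDP packet sent to a multicast group.
raw = struct.pack("!BBHHHBBH4s4s", 0x45, 0, 1424, 1, 0, 64, 17, 0,
                  bytes([192, 168, 1, 10]), bytes([239, 1, 1, 1]))
hdr = parse_ipv4_header(raw)
```

Timing therefore has to come from elsewhere, such as the RTP timestamps inside the payload and a shared PTP reference, rather than from IP itself.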

WS: As you know, Wheatstone has been making routing console systems since the early 2000s. But you have a rather extensive history too, given your, what, 50-plus years in the field?

FH: Actually, the period I think of often is that point around ’98 or 2000, when SMPTE was struggling with digital standards and getting introduced to digital networking. But yes, years before that, in 1984, I had the privilege to get involved in planning and design for RCA to put in its own network and take out AT&T as a service provider. As you’ll recall, the AT&T divestiture took place around then. We built a teleconferencing room in our building as a model example. You’ll find this interesting. The equipment at the time took 6 or 8 megabits per second of bandwidth, multiple T1s, and the encoding and decoding or compression equipment cost hundreds of thousands of dollars.

WS: Whew. Thanks for that bit of history. It makes us appreciative of all that we can do with IP and for a whole lot less.

Fred Huffman is the principal consultant at Huffman Technical Services. He’s the author of “Ethernet Matters . . .,” a series of booklets that introduce Ethernet in broadcast content creation and distribution facility architecture and design. He can be reached by email or at 732-787-5462.